Slow down a moment. Why are you doing this? That’s not a normal situation. I wonder if a better solution is available, given that you are unfamiliar with this setup.

I don’t think you really need two complete instances of Redash pointed at the same metadata database. In fact, doing this can produce strange bugs around database locking.

To actually answer your question:

I understand. Before going further, it helps to know a little about docker-compose, particularly how its networking behaves. I’ll give you a short summary here, but you can always check out the Docker docs for something deeper.

In a typical network, each computer receives an IP address from a DHCP server. Sometimes these addresses change; other times they are fixed, or static. For common resources like a database or an email server, the DHCP server can be configured to always give that machine the same IP address. So a database could always be found at 172.20.16.20, for example.
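To make the difference between a reserved address and a dynamic one concrete, here is a toy model in Python. It is not a real DHCP server and speaks no DHCP protocol; the class name, the MAC addresses, and the "first free address" pool policy are all hypothetical. It only shows that a reserved client always gets the same IP, while an ordinary client can get a different one after its lease is released.

```python
import ipaddress


class ToyDhcpServer:
    """Toy model of DHCP address assignment with static reservations.

    Hypothetical illustration only: reserved clients always receive their
    fixed address, everyone else draws from a shrinking/growing pool.
    """

    def __init__(self, subnet: str, reservations: dict[str, str]):
        self.reservations = dict(reservations)  # MAC address -> fixed IP
        reserved = {ipaddress.ip_address(ip) for ip in reservations.values()}
        # Dynamic pool: every usable host address that is not reserved.
        self.pool = [ip for ip in ipaddress.ip_network(subnet).hosts() if ip not in reserved]
        self.leases: dict[str, str] = {}  # MAC address -> currently leased IP

    def request(self, mac: str) -> str:
        # Reserved machines (like a database server) always get the same IP.
        if mac in self.reservations:
            return self.reservations[mac]
        # Everyone else takes the next free address from the pool.
        ip = str(self.pool.pop(0))
        self.leases[mac] = ip
        return ip

    def release(self, mac: str) -> None:
        # A released address goes to the back of the pool, so the same
        # machine will usually get a different address next time.
        ip = self.leases.pop(mac, None)
        if ip is not None:
            self.pool.append(ipaddress.ip_address(ip))
```

With `ToyDhcpServer("172.20.16.0/24", {"aa:bb:cc:dd:ee:01": "172.20.16.20"})`, the database's MAC always gets `172.20.16.20`, while a laptop that releases and re-requests its lease moves from `172.20.16.1` to `172.20.16.2`.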

When you create a service in docker-compose, each service’s container receives its own IP address, and that address can change whenever the container is recreated. Pinning every one of them to the same address every time it starts is a lot of hassle. So how do the services talk to one another?

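For this, docker-compose puts the services of a project on a shared network where each service can be reached by its name. Whenever one service talks to another, the configuration doesn’t need to include an IP address. It can use the name of the service instead, and Docker’s built-in DNS translates that name into the container’s current IP address.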
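If you want to see the name lookup happen, a small Python helper like the one below can be run from a shell inside one of the Redash containers (Redash is a Python application, so an interpreter should be available there, but treat that as an assumption about your image). The function name `resolve_service` is hypothetical; it just wraps the standard `socket.getaddrinfo` call.

```python
import socket


def resolve_service(name: str) -> list[str]:
    """Return the sorted IPv4 addresses that a hostname resolves to.

    Inside a docker-compose container, a service name such as "redis" is
    answered by Docker's embedded DNS, so the caller never needs to know
    which IP address the container happens to have right now.
    """
    infos = socket.getaddrinfo(name, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})
```

Inside the Redash server container, `resolve_service("redis")` should return whatever address the Redis container currently has. Outside the compose network, the same name will normally fail to resolve.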

Looking at Redash’s default configuration, you can see that it uses service names instead of IP addresses. For example, REDASH_REDIS_URL defaults to redis://redis:6379/0. The host in that URL is redis because that is the name of the service. So even if the service restarts and gets a new IP address every time, the config file doesn’t need an update.
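As a quick way to check which kind of host a connection URL uses, this Python sketch splits the URL with the standard library and reports whether the host is a literal IP address or a name (such as a compose service name). The helper `describe_url` is hypothetical, not part of Redash.

```python
import ipaddress
from urllib.parse import urlparse


def describe_url(url: str) -> dict:
    """Split a connection URL and report whether its host is a literal IP."""
    parsed = urlparse(url)
    try:
        ipaddress.ip_address(parsed.hostname)
        host_kind = "ip address"
    except ValueError:
        # Not an IP literal, so it must be looked up by name
        # (inside docker-compose, that is a service name).
        host_kind = "service name"
    return {
        "scheme": parsed.scheme,
        "host": parsed.hostname,
        "port": parsed.port,
        "path": parsed.path,  # for Redis URLs, "/0" selects database 0
        "host_kind": host_kind,
    }
```

For the default `redis://redis:6379/0`, this reports host `redis`, port `6379`, and `host_kind` of `"service name"`. A URL such as `redis://172.20.16.20:6379/0` would be reported as `"ip address"` instead.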

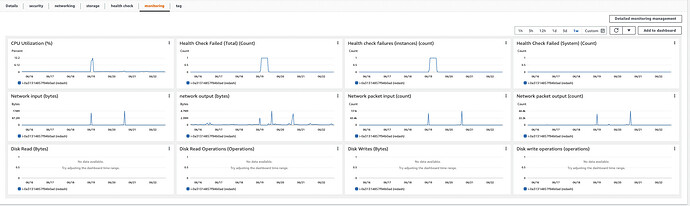

It does not, unless you update both of your docker-compose files to use externally accessible URLs. Right now you have two completely separate instances of Redash, and each one talks to its own copy of postgres and redis. Even if both files say postgres, that name resolves to a different container within each project. If you want them to use the same postgres, you need to configure your Amazon (AWS) settings so that postgres always has the same IP address, and then point both instances at that address.
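To make the "same name, different database" trap visible, here is a hedged Python sketch that compares the connection settings of two instances. It assumes each instance’s settings are available as a dict of environment variables using Redash’s REDASH_DATABASE_URL and REDASH_REDIS_URL keys; the function name, the set of compose-local service names, and the example hosts are hypothetical. It flags URLs that point at different targets, and also URLs that match textually but use a compose service name, since each project resolves that name to its own container.

```python
from urllib.parse import urlparse

# Hypothetical: service names that each docker-compose project defines locally.
COMPOSE_LOCAL_SERVICES = {"postgres", "redis"}


def shared_backend_problems(
    env_a: dict[str, str],
    env_b: dict[str, str],
    keys: tuple[str, ...] = ("REDASH_DATABASE_URL", "REDASH_REDIS_URL"),
) -> list[str]:
    """List reasons why two instances are NOT sharing the same backends."""
    problems = []
    for key in keys:
        a, b = urlparse(env_a[key]), urlparse(env_b[key])
        # Compare host, port and path (database name or Redis db number).
        target_a = (a.hostname, a.port, a.path)
        target_b = (b.hostname, b.port, b.path)
        if target_a != target_b:
            problems.append(f"{key}: targets differ ({target_a} vs {target_b})")
        elif a.hostname in COMPOSE_LOCAL_SERVICES:
            # Identical text, but the name resolves inside each project separately.
            problems.append(f"{key}: '{a.hostname}' resolves to each project's own container")
    return problems
```

Two untouched default setups (for example `postgresql://postgres@postgres/postgres` in both) produce one problem per key, while two configs that both point at the same fixed address, such as `172.20.16.20`, produce an empty list. If you only intend to share postgres, pass `keys=("REDASH_DATABASE_URL",)`.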

As I wrote above, this is not a good idea. Proceed at your own risk.