Are there any ways to get SQRT in queries from existing queries?

It seems SQLite, which is used in queries from existing queries, doesn’t support SQRT and I found the extension at the bottom of this page (http://www.sqlite.org/contrib) might help, but I don’t know how to install it into Redash.

Or, are there any other ways?

I’m also hoping to use other math functions like STDEV, so maybe it’d be the best if I could install the math extension above.

I resolved this by myself.

I compiled the C file as follows (on Ubuntu):

gcc -fPIC -shared extension-functions.c -o libsqlitefunctions.so -lm

and then added the following two lines in query_results.py

connection.enable_load_extension(True)

cursor.execute('select load_extension("/home/ubuntu/libsqlitefunctions.so");')
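For context, here is a minimal sketch of how those two lines might sit around a connection. It assumes query_results.py uses Python's standard sqlite3 module with an in-memory database, which may not match your Redash version. The function name connect_with_extension is hypothetical. The sketch calls connection.load_extension(), the sqlite3 module's counterpart to the SQL load_extension() call, and switches extension loading off again afterwards so that user-written queries cannot load arbitrary libraries.

```python
import sqlite3

# Path taken from the post above; adjust it to wherever the compiled library lives.
EXTENSION_PATH = "/home/ubuntu/libsqlitefunctions.so"


def connect_with_extension(path=EXTENSION_PATH):
    """Open an in-memory SQLite connection and try to load the math extension.

    Returns (connection, loaded), where loaded says whether the library was loaded.
    The function name is hypothetical; it is not part of Redash.
    """
    connection = sqlite3.connect(":memory:")
    loaded = False
    # Some Python builds ship sqlite3 without extension-loading support,
    # in which case these methods do not exist on the connection.
    if hasattr(connection, "enable_load_extension"):
        connection.enable_load_extension(True)
        try:
            connection.load_extension(path)
            loaded = True
        except sqlite3.OperationalError:
            # Library missing or not loadable; carry on without it.
            pass
        finally:
            # Turn loading off again so SQL queries cannot call load_extension().
            connection.enable_load_extension(False)
    return connection, loaded


if __name__ == "__main__":
    connection, loaded = connect_with_extension()
    if loaded:
        print(connection.execute("select sqrt(16)").fetchone())
    else:
        print("extension not loaded")
```

If loaded comes back False, the usual suspects are a wrong path or a Python build without loadable-extension support.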

Please let me know if there are better ways.
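One possible alternative, if compiling the C extension is inconvenient: Python's sqlite3 module can register Python callables as SQL functions through create_function and create_aggregate. The sketch below is not the approach described above and is not part of Redash. The names register_math_functions and StdevAggregate are hypothetical, and STDEV here is the sample standard deviation, which may differ from the extension's definition. It would still need to be called on the connection inside query_results.py.

```python
import math
import sqlite3
import statistics


class StdevAggregate:
    """Aggregate that collects non-NULL values and returns their sample stdev."""

    def __init__(self):
        self.values = []

    def step(self, value):
        # SQLite passes NULL as None; skip it like the built-in aggregates do.
        if value is not None:
            self.values.append(value)

    def finalize(self):
        # The sample standard deviation needs at least two values.
        if len(self.values) < 2:
            return None
        return statistics.stdev(self.values)


def _sqrt(x):
    # Return NULL for NULL or negative input instead of raising an error.
    if x is None or x < 0:
        return None
    return math.sqrt(x)


def register_math_functions(connection):
    """Register sqrt() and stdev() on a sqlite3 connection (hypothetical helper)."""
    connection.create_function("sqrt", 1, _sqrt)
    connection.create_aggregate("stdev", 1, StdevAggregate)


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    register_math_functions(connection)
    connection.execute("create table t (x real)")
    connection.executemany("insert into t values (?)", [(1,), (2,), (3,), (4,)])
    print(connection.execute("select sqrt(x) from t").fetchall())
    print(connection.execute("select stdev(x) from t").fetchone())
```

The trade-off is speed: Python callbacks are slower than the compiled extension, and only the functions you register are available.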

Hey! I am facing the same problem: I want to use the SQRT function in SQLite.

I am using the Docker installation. I think I can compile that C file with:

gcc -fPIC -shared extension-functions.c -o libsqlitefunctions.so -lm

But how can I edit the query_results.py file? As I understand it, I can’t edit the source code in a docker image, since it is built already, right? Is there any other way out? Thanks for any help!

I’m assuming you added the gcc compile step to your Dockerfile. You can update query_results.py the same way. Just copy the file from our git repo, save your code changes alongside the Dockerfile (on your file system), and then add this directive to it:

ADD query_results.py /app/redash/query_runner/query_results.py

Then Redash will use your edited file instead of the default.

Did you get this to work? GCC works inside the redash server container, but I get the following error

fatal error: sqlite3ext.h: No such file or directory

From what I found, this error is caused by the missing libsqlite3-dev library. However, I can’t install libsqlite3-dev because I don’t have sudo access inside the container. I also can’t use nano to edit the query_results.py file, because nano isn’t available in the container either.

Would love to hear how you did it.

Check out the guidance earlier in the thread. You don’t edit the file within the container. You edit the file on your local disk and then link it into the container. Or if you’re using the local development Docker setup you can just edit the source file within the repo directly.

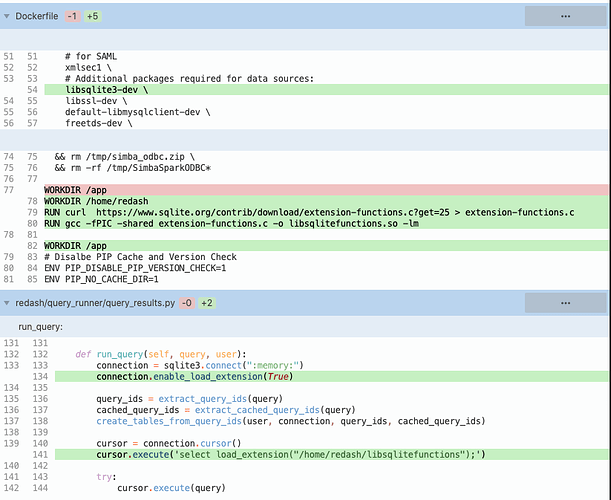

I made a commit to my fork of redash that shows exactly the changes you need to make:

I’m pasting the git diff here as well:

After making these changes to your Dockerfile and query_results.py just run docker compose build and then restart your containers with docker compose up -d.

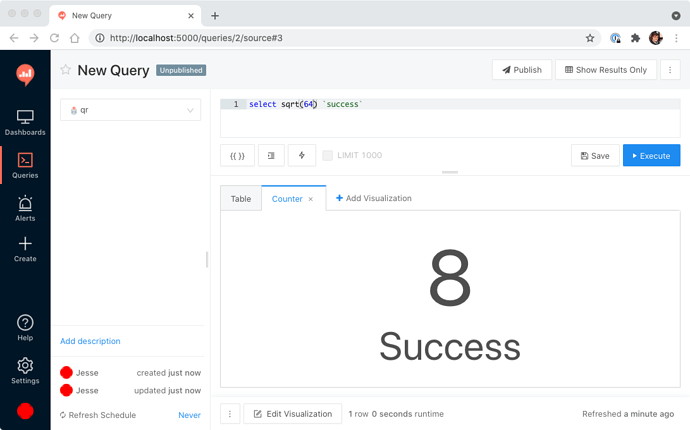

Confirmation it works:

Thanks @yuzwyy for writing up the basics. Hopefully this guide helps others in the future.

Thanks. I was working on a DigitalOcean Marketplace Redash image. I built the .so file (renaming it as you did in your Dockerfile). The build did produce one small error, but the .so file was built. I copied it into the home folder of the Docker image, as in your Dockerfile, and then copied the updated query_results.py file over the old one in the container. That had no immediate effect, so I ran docker-compose up -d, which crashed the server. After I rebooted, the server came back “up” but wasn’t accessible, and the system load never dropped back below 1, even after 10 minutes. I’m not sure whether I went wrong somewhere or whether loading the math functions needs more than my 2 GB, 1 CPU droplet can handle.

I also tried spinning up your fork on a DigitalOcean droplet with Docker. There were a few errors during docker compose build, and when I tried to access myurl:5000 I got:

sqlalchemy.exc.ProgrammingError: (psycopg2.errors.UndefinedTable) relation "organizations" does not exist

LINE 2: FROM organizations

I would really like to get this working but it might be just beyond my capabilities at the moment.

Sorry to hear you’re frustrated with this. I think you’re very close though. Just a single command.

This just means the metadata database wasn’t created. You have to run the create command:

$ docker-compose run --rm server create_db

You mentioned there were build errors though? What were they?

So if I run

docker-compose run --rm server create_db

the login screen for setting the administration password does appear. However, once I try to log in I get

Bad Request

The CSRF session token is missing.

Here are all the errors that pop up during the build:

debconf: delaying package configuration, since apt-utils is not installed

debconf: delaying package configuration, since apt-utils is not installed

WARNING: Running pip as the ‘root’ user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

ERROR: After October 2020 you may experience errors when installing or updating packages. This is because pip will change the way that it resolves dependency conflicts.

We recommend you use --use-feature=2020-resolver to test your packages with the new resolver before it becomes the default.

httplib2 0.19.1 requires pyparsing<3,>=2.4.2, but you’ll have pyparsing 2.1.4 which is incompatible.

botocore 1.13.50 requires urllib3<1.26,>=1.20; python_version >= “3.4”, but you’ll have urllib3 1.26.6 which is incompatible.

dql 0.5.26 requires python-dateutil<2.7.0, but you’ll have python-dateutil 2.8.1 which is incompatible.

memsql 3.0.0 requires mysqlclient==1.3.13, but you’ll have mysqlclient 1.3.14 which is incompatible.

pymapd 0.19.0 requires thrift==0.11.0, but you’ll have thrift 0.13.0 which is incompatible.

pyopenssl 20.0.1 requires cryptography>=3.2, but you’ll have cryptography 2.9.2 which is incompatible.

snowflake-connector-python 2.1.3 requires certifi<2021.0.0, but you’ll have certifi 2021.5.30 which is incompatible.

snowflake-connector-python 2.1.3 requires pytz<2021.0, but you’ll have pytz 2021.1 which is incompatible.

snowflake-connector-python 2.1.3 requires requests<2.23.0, but you’ll have requests 2.25.1 which is incompatible.

snowflake-connector-python 2.1.3 requires urllib3<1.26.0,>=1.20, but you’ll have urllib3 1.26.6 which is incompatible.

ERROR: After October 2020 you may experience errors when installing or updating packages. This is because pip will change the way that it resolves dependency conflicts.

We recommend you use --use-feature=2020-resolver to test your packages with the new resolver before it becomes the default.

snowflake-connector-python 2.1.3 requires certifi<2021.0.0, but you’ll have certifi 2021.5.30 which is incompatible.

snowflake-connector-python 2.1.3 requires pytz<2021.0, but you’ll have pytz 2021.1 which is incompatible.

snowflake-connector-python 2.1.3 requires requests<2.23.0, but you’ll have requests 2.25.1 which is incompatible.

ERROR: After October 2020 you may experience errors when installing or updating packages. This is because pip will change the way that it resolves dependency conflicts.

We recommend you use --use-feature=2020-resolver to test your packages with the new resolver before it becomes the default.

snowflake-connector-python 2.1.3 requires certifi<2021.0.0, but you’ll have certifi 2021.5.30 which is incompatible.

snowflake-connector-python 2.1.3 requires pytz<2021.0, but you’ll have pytz 2021.1 which is incompatible.

pymapd 0.19.0 requires thrift==0.11.0, but you’ll have thrift 0.13.0 which is incompatible.

memsql 3.0.0 requires mysqlclient==1.3.13, but you’ll have mysqlclient 1.3.14 which is incompatible.

google-auth-httplib2 0.1.0 requires httplib2>=0.15.0, but you’ll have httplib2 0.14.0 which is incompatible.

dql 0.5.26 requires pyparsing==2.1.4, but you’ll have pyparsing 2.3.0 which is incompatible.

dql 0.5.26 requires python-dateutil<2.7.0, but you’ll have python-dateutil 2.8.0 which is incompatible.

black 21.6b0 requires click>=7.1.2, but you’ll have click 6.7 which is incompatible.

Looks like you have an outdated docker-compose.yml file and missed some of the setup steps. I’d recommend starting fresh using this guide to get familiar with the process. Once you have this working it will be easier to navigate setting up Redash in a different production environment. If you have issues with this please open another thread, since your issue is not related to the question at hand.