Hi! I am following the admin guide (https://redash.io/help/open-source/admin-guide/ldap-authentication) to add LDAP. Where should I add the following lines from the guide?

FROM redash/redash:latest

RUN pip install ldap3

I tried two approaches (as described in #8294), but neither works.

The only method that worked for me was installing ldap3 with pip in each container (worker, scheduler, and server). But this is not a good, permanent fix. Any other ideas?

Thanks in advance for your help

I don't know how you deployed your version, but I believe you used the latest Docker image. You should have a Dockerfile containing the following line:

FROM redash/redash:latest

Before deploying, you need to add this line to it:

RUN pip install ldap3

This saves you from installing the package manually on each node, and the change persists if a new instance is created.

Thanks for your reply.

This is the file content:

# PATH : /redash/Dockerfile

FROM node:14.17 as frontend-builder

RUN npm install --global --force yarn@1.22.10

# Controls whether to build the frontend assets

ARG skip_frontend_build

ENV CYPRESS_INSTALL_BINARY=0

ENV PUPPETEER_SKIP_CHROMIUM_DOWNLOAD=1

RUN useradd -m -d /frontend redash

USER redash

WORKDIR /frontend

COPY --chown=redash package.json yarn.lock .yarnrc /frontend/

COPY --chown=redash viz-lib /frontend/viz-lib

# Controls whether to instrument code for coverage information

ARG code_coverage

ENV BABEL_ENV=${code_coverage:+test}

RUN if [ "x$skip_frontend_build" = "x" ] ; then yarn --frozen-lockfile --network-concurrency 1; fi

COPY --chown=redash client /frontend/client

COPY --chown=redash webpack.config.js /frontend/

RUN if [ "x$skip_frontend_build" = "x" ] ; then yarn build; else mkdir -p /frontend/client/dist && touch /frontend/client/dist/multi_org.html && touch /frontend/client/dist/index.html; fi

FROM python:3.7-slim-buster

EXPOSE 5000

# Controls whether to install extra dependencies needed for all data sources.

ARG skip_ds_deps

# Controls whether to install dev dependencies.

ARG skip_dev_deps

RUN useradd --create-home redash

# Ubuntu packages

RUN apt-get update && \

apt-get install -y --no-install-recommends \

curl \

gnupg \

build-essential \

pwgen \

libffi-dev \

sudo \

git-core \

# Postgres client

libpq-dev \

# ODBC support:

g++ unixodbc-dev \

# for SAML

xmlsec1 \

# Additional packages required for data sources:

libssl-dev \

default-libmysqlclient-dev \

freetds-dev \

libsasl2-dev \

unzip \

libsasl2-modules-gssapi-mit && \

apt-get clean && \

rm -rf /var/lib/apt/lists/*

ARG TARGETPLATFORM

ARG databricks_odbc_driver_url=https://databricks.com/wp-content/uploads/2.6.10.1010-2/SimbaSparkODBC-2.6.10.1010-2-Debian-64bit.zip

RUN if [ "$TARGETPLATFORM" = "linux/amd64" ]; then \

curl https://packages.microsoft.com/keys/microsoft.asc | apt-key add - \

&& curl https://packages.microsoft.com/config/debian/10/prod.list > /etc/apt/sources.list.d/mssql-release.list \

&& apt-get update \

&& ACCEPT_EULA=Y apt-get install -y --no-install-recommends msodbcsql17 \

&& apt-get clean \

&& rm -rf /var/lib/apt/lists/* \

&& curl "$databricks_odbc_driver_url" --location --output /tmp/simba_odbc.zip \

&& chmod 600 /tmp/simba_odbc.zip \

&& unzip /tmp/simba_odbc.zip -d /tmp/ \

&& dpkg -i /tmp/SimbaSparkODBC-*/*.deb \

&& printf "[Simba]\nDriver = /opt/simba/spark/lib/64/libsparkodbc_sb64.so" >> /etc/odbcinst.ini \

&& rm /tmp/simba_odbc.zip \

&& rm -rf /tmp/SimbaSparkODBC*; fi

WORKDIR /app

# Disable PIP Cache and Version Check

ENV PIP_DISABLE_PIP_VERSION_CHECK=1

ENV PIP_NO_CACHE_DIR=1

# rollback pip version to avoid legacy resolver problem

RUN pip install pip==20.2.4;

# We first copy only the requirements file, to avoid rebuilding on every file change.

COPY requirements_all_ds.txt ./

RUN if [ "x$skip_ds_deps" = "x" ] ; then pip install -r requirements_all_ds.txt ; else echo "Skipping pip install -r requirements_all_ds.txt" ; fi

COPY requirements_bundles.txt requirements_dev.txt ./

RUN if [ "x$skip_dev_deps" = "x" ] ; then pip install -r requirements_dev.txt ; fi

COPY requirements.txt ./

RUN pip install -r requirements.txt

COPY --chown=redash . /app

COPY --from=frontend-builder --chown=redash /frontend/client/dist /app/client/dist

RUN chown redash /app

USER redash

ENTRYPOINT ["/app/bin/docker-entrypoint"]

CMD ["server"]

Is this the right file? Where should I insert the RUN pip install ldap3 command? Is it possible to rebuild the instance without redeploying?

Thanks again

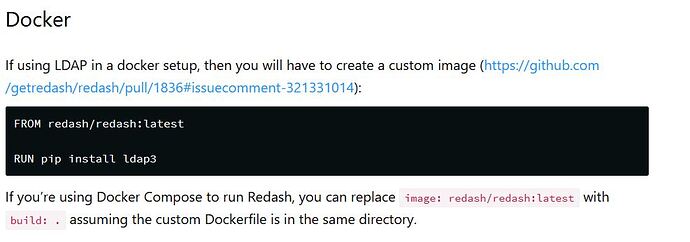

As the admin guide describes (see the screenshot), I use docker-compose, and I have a docker-compose.yml file in the same directory.

I really don't understand what is happening with my configuration. I created a Dockerfile.ldap as follows:

FROM redash/redash:latest

RUN pip install --user ldap3

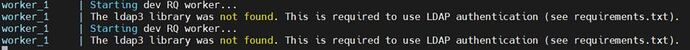

Then I ran docker build -f Dockerfile.ldap . --no-cache. After that, docker-compose logs -f --tail 5 gives this result:

Any ideas?

This is the LDAP part of my docker-compose.yml configuration:

REDASH_LDAP_LOGIN_ENABLED: "true"

REDASH_PASSWORD_LOGIN_ENABLED: "false"

REDASH_LDAP_URL: "XXX.XXX.XX.XX:389"

REDASH_LDAP_BIND_DN: "..."

REDASH_LDAP_BIND_DN_PASSWORD: "..."

REDASH_LDAP_DISPLAY_NAME_KEY: "..."

REDASH_LDAP_DISPLAY_EMAIL_KEY: "..."

REDASH_LDAP_CUSTOM_USERNAME_PROMPT: "..."
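When settings like these seem to have no effect, one thing worth ruling out is whether the variables actually reach the running container. The following is only a minimal sketch of such a check, not part of Redash: the function name `ldap_settings` is made up for illustration, and it simply lists the REDASH_LDAP_* variables visible to the Python process, masking any whose name contains PASSWORD.

```python
import os
from typing import Dict, Mapping


def ldap_settings(environ: Mapping[str, str]) -> Dict[str, str]:
    """Collect REDASH_LDAP_* variables from `environ`.

    Values of variables whose name contains "PASSWORD" are masked so the
    output can be pasted into a forum post. (Hypothetical helper, not a
    Redash API.)
    """
    result: Dict[str, str] = {}
    for key in sorted(environ):
        if not key.startswith("REDASH_LDAP_"):
            continue
        result[key] = "***" if "PASSWORD" in key else environ[key]
    return result


if __name__ == "__main__":
    # Print what this process can see; an empty output means none of the
    # REDASH_LDAP_* variables were passed to the container.
    for key, value in ldap_settings(os.environ).items():
        print(f"{key}={value}")
```

Assuming the script is saved locally under a name such as check_env.py (a hypothetical filename), it could be piped into a running service with something like docker-compose exec -T server python - < check_env.py. Note that REDASH_PASSWORD_LOGIN_ENABLED does not carry the REDASH_LDAP_ prefix, so this sketch will not list it.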

Thanks again

GymYJ

September 15, 2022, 3:57am


In my case, I added a build section to the docker-compose.yml file:

version: "2"
x-redash-service: &redash-service
  image: redash/redash:8.0.0.b32245
  build:
    context: .
    dockerfile: Dockerfile
  depends_on:
    - postgres
    - redis
  env_file: /opt/redash/env
  restart: always
services:
  ...

Then I wrote a Dockerfile in the same directory as docker-compose.yml:

FROM redash/redash:latest

RUN pip install ldap3 --user

and then ran docker-compose:

$ sudo docker-compose run --rm server create_db

$ sudo docker-compose up -d
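After a rebuild like this, it can help to confirm that each service (server, worker, scheduler) really runs the image that contains ldap3. The snippet below is a minimal sketch of such a check, not something from the admin guide: the helper names `module_available` and `report` are made up for illustration, and the script only asks the container's Python interpreter whether the module can be found.

```python
import importlib.util
import site


def module_available(name: str) -> bool:
    """Return True if the top-level module `name` can be found by this interpreter."""
    return importlib.util.find_spec(name) is not None


def report(name: str = "ldap3") -> str:
    """Build a one-line status message for `name` (hypothetical helper)."""
    status = "importable" if module_available(name) else "NOT importable"
    # With `pip install --user`, packages are placed in the user site
    # directory of the user that ran pip, so that path is shown as well.
    return f"{name}: {status} (user site: {site.getusersitepackages()})"


if __name__ == "__main__":
    print(report())
```

Assuming the script is saved locally as check_ldap3.py (a hypothetical filename), it could be run against each service with something like sudo docker-compose exec -T server python - < check_ldap3.py, repeating the command for worker and scheduler. If one service reports that ldap3 is not importable, that service is probably still running the unmodified image.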
