Following @ariarijp pull request on changing the way we find query IDs in Query Results queries to later support queries with parameters, I wanted to start a discussion on how this might work and to share some thoughts I had:

There are two use cases for querying from queries with parameters:

1.

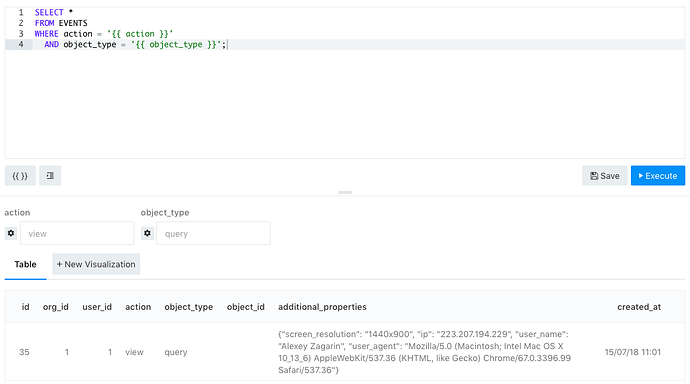

The simple use case: you just want to use a query with parameters and you “know” what the values are:

- We can detect that the query you’re querying from needs parameters and show the needed parameter input fields and pass those values along when loading this query (will require adding the logic of detecting query IDs in client side code).

- Allow for some syntax of passing parameters like @ariarijp suggested (although I feel that using JSON is not very user friendly, although very pragmatic).

2.

There is another more advanced use case: you want to pass returning values from one query into another. Here’s an example:

Datasource A has users list, datasource B has user events. You want to return list of active users in the past day along with their details. So you need to filter the users list in A based on ids from B.

So the query A on data source A might be:

SELECT name FROM users WHERE id IN ({{user_ids}});

The query B on B might be:

SELECT distinct user_id FROM events WHERE created_at > '...';

And you want the results from query B to be merged into the the query A.

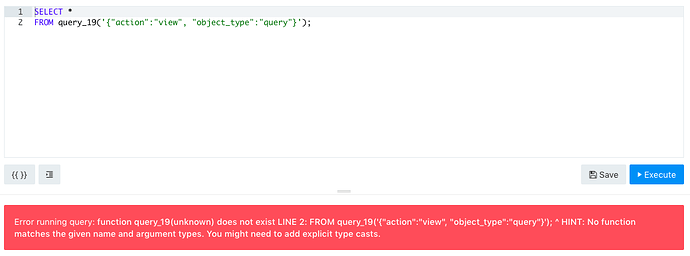

Maybe this doesn’t have to be implemented as part of query results feature, though? Maybe it can be some syntax like parameters:

SELECT name FROM users WHERE id IN ({% query_results 456 %});

query_results is a function to load query results and we can have others to insert snippets and maybe other things?

I hope the explanation makes sense. Of course we can implement use case 1 without implementing use case 2.

Any feedback or thoughts will be welcomed

)

)